- Three Key Questions Needing Answers Within Three Minutes When You Suspect a Breach

- Using Deception and Endpoint Logs to Backtrack Command and Control

- Improving SOC Triage Workflow with Prevention Failure Detection

What could possibly go wrong? Plenty.

RACE TO THE BOTTOM (OF THE ALERT PILE)

Most organizations use a Security Information and Event Management (SIEM) solution in their SOC to aggregate, correlate and prioritize the alerts presented to the front-line SOC Analyst. Initial triage is generally handled by a Level I Analyst, often among the newest and least experienced members of the team. With network-based IDS sensors often generating 40 events per second, and a myriad of other security solutions plus operating system and application logs feeding into the SIEM, keeping up with the alerts on the screen is a daunting task. To further increase the pressure, SOC Analysts are usually expected to triage an alert in three minutes or less. Get it right, and you live to triage another day; get it wrong, and your stock price tumbles, people lose jobs and your company gets a ton of negative press.

Security Teams need to do three things to make their lives better:

- Refocus front-line triage on Prevention Failure Detection (PFD)

- Prioritize solutions that provide high-fidelity, low-volume alerts during triage

- Enable correlations that answer the three key questions every SOC Analyst must ask when investigating alerts

PREVENTION FAILURE DETECTION

I first heard the term “Prevention Failure Detection” from a friend of mine, Tim Crothers, Vice-President of Cyber Security for Target Corporation. PFD moves your detection capabilities away from trying to detect everything happening in your environment and instead focuses them on where your prevention capabilities are most likely to fail. It also focuses your alerting, visualizations and SOC displays not on everything that has been seen, but only on what your PFD workflows say is important. You don’t need pie charts and bar graphs telling you how many times your AV quarantined a file or your firewall blocked an access attempt; those distract your team from the alerts that matter. You want to establish clear hunting grounds for your SOC and Incident Response teams to focus their efforts.
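To make the PFD idea concrete, here is a minimal Python sketch of a triage view that suppresses "prevention worked" events and keeps only sources designated as hunting grounds. The `Event` record, its `source` and `outcome` fields, the outcome values and the set of PFD sources are all hypothetical placeholders; map them to whatever your SIEM's normalization actually produces.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Hypothetical outcome values meaning "the prevention control worked".
PREVENTION_SUCCESS_OUTCOMES = {"blocked", "quarantined", "denied", "dropped"}

# Hypothetical log sources that the PFD workflow treats as hunting grounds.
PFD_SOURCES = {"deception", "sysmon"}


@dataclass
class Event:
    source: str   # product or log source that produced the event
    outcome: str  # what the control did with the activity
    summary: str  # short human-readable description


def pfd_view(events: Iterable[Event]) -> List[Event]:
    """Keep only events that might indicate a prevention failure."""
    return [
        e for e in events
        if e.source.lower() in PFD_SOURCES
        and e.outcome.lower() not in PREVENTION_SUCCESS_OUTCOMES
    ]


if __name__ == "__main__":
    sample = [
        Event("antivirus", "quarantined", "AV quarantined a file"),
        Event("firewall", "blocked", "Firewall blocked an access attempt"),
        Event("deception", "alerted", "Endpoint touched a decoy"),
    ]
    for e in pfd_view(sample):
        print(e.summary)  # only the decoy touch survives the filter
```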

HIGH-FIDELITY ALERTS

If you knew an alert was a true positive every time it fired, how would that change your workflow and decision process for handling that incident? A high-fidelity alert is one whose information you can trust and act on. Such alerts also tend to be very low in volume (unless you’re having a really bad day). Few solutions can claim zero false positives (and I would be wary of any vendor that does make that claim!); however, let’s consider how Deception Solutions rate on fidelity and alert volume.

Deception and High-Fidelity

Deception-based solutions use decoys and misinformation to divert and delay an adversary, giving the SOC / IR teams sufficient time to remediate before the adversary can complete his mission. Deception objects are not known to normal end-users and are whitelisted for the authorized vulnerability and IT asset discovery scanners in the organization, so no one should ever touch a deception decoy. Let’s consider the possible ways a decoy could be touched:

- Network Misconfiguration – a scanner was left off the whitelist, or some other misconfiguration causes a system to attempt communication with a decoy

- Curious Insider – an end-user or system administrator pokes around outside of their normal duties, comes across a decoy and reaches out to see what the system is all about

- Malicious Insider – an end-user or system administrator is looking to steal information or cause disruption and stumbles across a decoy while looking for the crown jewels

- External Adversary – an adversary of varying skill level and resources has evaded your prevention layers and is now poking around inside your network

In all four cases, action is required and demands immediate attention. The first two are not malicious in nature and will most likely be resolved by groups other than the security team (most likely Network Operations for the first and Human Resources for the second). The last two are malicious and require immediate escalation and the gathering of additional information to learn the full nature of the attack.
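The routing described above can be sketched as a simple lookup table. This is a minimal Python illustration, not a product feature: deciding which case a given decoy touch belongs to still requires investigation, and the team names are placeholders taken from the examples in the text.

```python
from enum import Enum


class DecoyTouch(Enum):
    """The four ways a deception decoy can be touched."""
    NETWORK_MISCONFIGURATION = "network misconfiguration"
    CURIOUS_INSIDER = "curious insider"
    MALICIOUS_INSIDER = "malicious insider"
    EXTERNAL_ADVERSARY = "external adversary"


# Hypothetical routing table: which group owns resolution of each case.
ROUTING = {
    DecoyTouch.NETWORK_MISCONFIGURATION: "Network Operations",
    DecoyTouch.CURIOUS_INSIDER: "Human Resources",
    DecoyTouch.MALICIOUS_INSIDER: "Incident Response (escalate immediately)",
    DecoyTouch.EXTERNAL_ADVERSARY: "Incident Response (escalate immediately)",
}


def is_malicious(touch: DecoyTouch) -> bool:
    """The last two cases are malicious and need immediate escalation."""
    return touch in (DecoyTouch.MALICIOUS_INSIDER, DecoyTouch.EXTERNAL_ADVERSARY)


def route(touch: DecoyTouch) -> str:
    """Every decoy touch demands action; only the owning team differs."""
    return ROUTING[touch]


if __name__ == "__main__":
    for touch in DecoyTouch:
        print(f"{touch.value}: {route(touch)} (malicious={is_malicious(touch)})")
```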

Deception and Low-Volume

Deception is a Breach Detection Solution. By that we mean that Deception is not generally used to detect Intrusion Attempts or even Breach Attempts. Deception is a great Prevention Failure Detection solution because it focuses detection capabilities on adversaries and malware that have already successfully bypassed your prevention capabilities.

In a typical breach scenario, an adversary spear-phishes an end-user and gets them to click on a malicious attachment or link; a payload is downloaded and/or detonated on the end-user’s system, and command and control is established between the adversary and the compromised system.

Breach Accomplished. The Doomsday Clock starts ticking.

Many security solutions had to fail for this to happen. Yet this first beachhead is not the adversary’s mission; they want your data, or to disrupt your operations. They must establish additional beachheads, reach out to application and database servers, map out your organization’s assets and determine likely targets. Most intrusion and breach attempts will be blocked by your prevention technologies, and you aren’t losing sleep over those. It is the ones that get through that should cost you sleep, and this is where Deception solutions step in, presenting the adversary with inviting targets that only an adversary should be touching.

Because of this, Deception alerts are few and far between (as I said earlier, unless you’re having a really bad day!).

THREE KEY QUESTIONS EVERY TRIAGE ANALYST MUST ANSWER

Whenever I have seen an analyst investigating a network-based alert (an alert that contains only IP addresses, ports and service information), three questions are always at the top of their mind, and having the answers would greatly improve both the speed of triage and the accuracy of any decisions made.

- User – Which endpoint user session caused this alert to occur? (i.e., which user clicked on something they shouldn’t have?)

- Process – Which process and parent process in that user session caused this alert to occur? (i.e., was it an interactive program like Chrome or Firefox, or an underlying process such as Explorer that normally doesn’t communicate on the network?)

- Network – What other systems has this endpoint communicated with in the few minutes surrounding this alert?

Answering Question #1 lets me focus on the roles and permissions that user has, so I can determine the potential extent of a breach (does this user have local admin rights? Is it a domain administrator?).

Answering Question #2 tells me whether this was a user-initiated action (John clicked a link in Chrome and downloaded a malicious payload) or an adversary-initiated action (the adversary, through an injected svchost process, downloaded Mimikatz to the workstation). The first is pre-breach/pre-detonation; the second is post-breach and requires a different level of urgency.

Answering Question #3 tells me whether the adversary has moved laterally, whether I can identify potential command and control servers, or whether a malware detonation is spreading to other systems.

PREPARING FOR THE APOCALYPSE

Now that we know the key questions we need answered, we turn our attention to high-fidelity alerts focused on Prevention Failure Detection. We need to identify the base and correlated events that will help us realize this vision. Once the logs, alerts and workflows have been identified, we can begin building out content that makes alert triage efficient and actionable.

Base Logs and Alerts

This paper will not address every potential log source and operating system; however, the concepts apply universally. For the purposes of this paper, we will focus on Windows workstations (because they are one of the most likely points of first breach) as our event source, and on ArcSight and Splunk (because that’s what I know) for our SIEM correlation efforts. As always, there may be more than one way to accomplish the goal; organizations should explore which methods fit their environments best.

Event Logs

| Log Source | Description | Key Fields Required |
|---|---|---|
| Windows Endpoint Log – Sysmon | Sysmon is a Microsoft Sysinternals tool that can be installed on Windows workstations to provide additional logging capabilities. Sysmon lets us log network connections (Sysmon Event ID 3) together with the associated process information. | Network Tuple, Process ID, User Session |
| Windows Endpoint Log – Security | The Windows Security event log contains entries for all processes created (Event ID 4688) and terminated (Event ID 4689) on the endpoint. Process creation/termination auditing must be enabled in the security policy. | User Session, Process Name, Process ID, Parent Process ID |
| High-Fidelity Network-based Alert | This can come from any source, as long as you consider it a high-fidelity alert that you always want correlated against the other event sources described above. For this paper we will use Deception-based network alerts from the Acalvio ShadowPlex solution. | Network Tuple |

CORRELATING HIGH-FIDELITY ALERTS

The first step in defining solid correlation use cases is being able to define and understand the problem.

Let’s review a (highly simplified) diagram of a typical breach by an adversary. The attacker sends a spear-phishing email to an end-user who opens the attachment or clicks on the link. The detonated process establishes persistence to ensure it will run if the system is rebooted or the user logs out/in. When this process runs, it will usually inject itself into a legitimate process and establish command and control (C&C) back to the adversary. From his remote system, the attacker will direct activities through the C&C channel and use tools that he downloads, or built-in system programs, to laterally move to other targets in the environment.

Correlate:

- Deception-based Alert

- Sysmon Network Process Log

Correlate:

- Sysmon Network Process (above)

- Process Creation Log

Correlate:

- Process Creation Log (above)

- Parent Process Creation Log
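The three correlation steps above amount to a chain of joins. The following Python sketch shows that chain under stated assumptions: events are already parsed into the hypothetical `DeceptionAlert`, `NetworkEvent` (Sysmon Event ID 3) and `ProcessCreation` (Security Event ID 4688) records, both endpoint sources carry the same host identifier, and a five-minute window (matching the `maxspan=5m` in the Splunk search below) is acceptable.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Correlation window; 5 minutes mirrors maxspan=5m in the Splunk search.
WINDOW = timedelta(minutes=5)


@dataclass
class DeceptionAlert:
    time: datetime
    src: str  # endpoint that touched the decoy
    dst: str  # decoy address


@dataclass
class NetworkEvent:  # Sysmon Event ID 3 ("Network connection detected")
    time: datetime
    host: str
    src: str
    dst: str
    pid: int
    user: str


@dataclass
class ProcessCreation:  # Windows Security Event ID 4688
    time: datetime
    host: str
    pid: int
    parent_pid: int
    name: str


def latest_creation(procs: List[ProcessCreation], host: str, pid: int,
                    before: datetime) -> Optional[ProcessCreation]:
    """Most recent creation of `pid` on `host` at or before `before`."""
    candidates = [p for p in procs
                  if p.host == host and p.pid == pid and p.time <= before]
    return max(candidates, key=lambda p: p.time, default=None)


def correlate(alert: DeceptionAlert, nets: List[NetworkEvent],
              procs: List[ProcessCreation]) -> Optional[dict]:
    # Step 1: deception alert <-> Sysmon network event with the same tuple.
    net = next((n for n in nets
                if n.src == alert.src and n.dst == alert.dst
                and abs(n.time - alert.time) <= WINDOW), None)
    if net is None:
        return None
    # Step 2: network event <-> creation of the process that opened it.
    proc = latest_creation(procs, net.host, net.pid, net.time)
    # Step 3: that process <-> creation of its parent process.
    parent = (latest_creation(procs, net.host, proc.parent_pid, proc.time)
              if proc else None)
    return {
        "compromised_ip": alert.src,
        "decoy_ip": alert.dst,
        "user": net.user,
        "process": proc.name if proc else None,
        "parent_pid": proc.parent_pid if proc else None,
        "parent_process": parent.name if parent else None,
    }
```

Because Windows reuses process IDs, the sketch picks the most recent creation of a PID before the event time rather than the first match it finds.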

ARCSIGHT HIGH-FIDELITY BREACH ALERT CORRELATION EXAMPLE

Here is what the associated logic would look like in the ArcSight Common Condition Editor (CCE):

SPLUNK HIGH-FIDELITY BREACH ALERT CORRELATION EXAMPLE

Here is what the associated logic would look like in the Splunk Search Interface if sending the events in tagged-field format:

Deception and Matching Endpoint Events

    ("Network connection detected" AND "Microsoft-Windows-Sysmon/Operational" AND NOT "SourceIsIpv6: true") OR ("Acalvio|ShadowPlex")
    | rex "CEF:1\|Acalvio\|.* src=(?<DeceptionSource>\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})"
    | rex "CEF:1\|Acalvio\|.* dst=(?<DeceptionDestination>\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})"
    | rex "CEF:1\|Microsoft\|.* src=(?<SysmonSource>\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})"
    | rex "CEF:1\|Microsoft\|.* dst=(?<SysmonDestination>\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})"
    | rex "sproc=(?<SourceProcess>.+?)(?=\s+\w+=|$)"
    | rex "CEF:1\|Acalvio\|(.*?)\|(.*?)\|(.*?)\|(?<IDSAlert>.*?)\|"
    | eval TestSIP=coalesce(DeceptionSource,SysmonSource)
    | eval TestDIP=coalesce(DeceptionDestination,SysmonDestination)
    | transaction TestSIP TestDIP maxspan=5m
    | search "Acalvio|ShadowPlex" sysmon eventcount>1
    | rename TestSIP AS "Compromised IP", TestDIP AS "Decoy IP", suser AS "Compromised User Session Name", SourceProcess AS "Compromised Process Name"
    | table _time, IDSAlert, "Compromised IP", "Decoy IP", "Compromised User Session Name", "Compromised Process Name"
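The `rex` commands in this search pull fields out of CEF records by regular expression. For testing extraction logic outside the SIEM, a minimal Python CEF parser might look like the following. It assumes the record layout the search implies (`CEF:<version>|vendor|product|...|extension`, with keys such as `src`, `dst`, `suser` and `sproc`), handles only the `\\`, `\|` and `\=` escapes, and the sample record in it is made up.

```python
import re
from typing import Dict

# CEF header fields in order; the eighth pipe-delimited piece is the extension.
HEADER_FIELDS = ["version", "device_vendor", "device_product", "device_version",
                 "signature_id", "name", "severity"]

# Extension = space-separated key=value pairs. A value runs until the next
# " key=" or the end of the record; "\=" inside a value is an escaped "=".
EXT_PAIR = re.compile(r"(\w+)=((?:\\=|[^=])*?)(?=\s+\w+=|\s*$)")
HEADER_ESCAPE = re.compile(r"\\([\\|])")  # \\ and \| in header fields
EXT_ESCAPE = re.compile(r"\\([\\=])")     # \\ and \= in extension values


def parse_cef(line: str) -> Dict[str, str]:
    """Parse one CEF record (optionally behind a syslog prefix) into a dict."""
    start = line.find("CEF:")
    if start < 0:
        raise ValueError("not a CEF record")
    # Split on pipes not preceded by a backslash; at most 8 pieces.
    parts = re.split(r"(?<!\\)\|", line[start:], maxsplit=7)
    if len(parts) != 8:
        raise ValueError("truncated CEF header")
    record = {k: HEADER_ESCAPE.sub(r"\1", v)
              for k, v in zip(HEADER_FIELDS, parts[:7])}
    record["version"] = record["version"].split(":", 1)[1]  # "CEF:1" -> "1"
    for key, value in EXT_PAIR.findall(parts[7]):
        record[key] = EXT_ESCAPE.sub(r"\1", value)
    return record


if __name__ == "__main__":
    # Made-up sample record for illustration only.
    sample = ("CEF:1|Acalvio|ShadowPlex|1.0|100|HTTP decoy accessed|9|"
              "src=10.0.0.5 dst=10.0.9.9")
    fields = parse_cef(sample)
    print(fields["name"], fields["src"], fields["dst"])
```

The `IDSAlert` field captured by the last `rex` corresponds to the CEF `name` header, the sixth pipe-delimited field when `CEF:1` is counted as the first.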

RELATED COMMUNICATIONS FROM SUSPECT ENDPOINT

Now that I have a high-fidelity alert correlated against endpoint process logs, I also want to gather up any other communications the compromised endpoint transmits. The goal is to help the analyst identify the attacker’s C&C channel more effectively.

Matching Communications to Breach Alert

Here is an example of the correlation rule in the ArcSight CCE; it aggregates all matching events within a seven-minute time window:
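A rough Python equivalent of that aggregation, useful for reasoning about what such a rule returns, is sketched below. The `Connection` record is hypothetical, and the sketch treats the seven-minute window as extending on both sides of the alert, which is an assumption; adjust it if your rule only looks forward or backward in time.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Dict, List


@dataclass
class Connection:  # hypothetical normalized network event
    time: datetime
    src: str
    dst: str
    dport: int


def related_communications(alert_time: datetime, compromised_ip: str,
                           decoy_ip: str, conns: List[Connection],
                           window: timedelta = timedelta(minutes=7)) -> Dict[str, dict]:
    """Summarize every other destination the compromised host contacted
    within `window` of the alert (on either side of it)."""
    summary: Dict[str, dict] = {}
    for c in conns:
        if c.src != compromised_ip or c.dst == decoy_ip:
            continue  # other hosts, or the decoy touch we already know about
        if abs(c.time - alert_time) > window:
            continue  # outside the aggregation window
        s = summary.setdefault(c.dst, {"count": 0, "ports": set(),
                                       "first": c.time, "last": c.time})
        s["count"] += 1
        s["ports"].add(c.dport)
        s["first"] = min(s["first"], c.time)
        s["last"] = max(s["last"], c.time)
    return summary
```

Sorting the result with `sorted(summary.items(), key=lambda kv: kv[1]["count"], reverse=True)` lists the most frequently contacted destinations first; those are natural places to start looking for the C&C channel.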

PUTTING IT ALL TOGETHER

With this correlation in place, the SOC Analyst has visibility into the systems involved, program/process information, the user session and the parent process ID responsible for the security alert:

QUESTION 1: WHO IS THE RESPONSIBLE USER AND PROCESS?

This correlation clearly tells me it was Steve’s user session, running Firefox, that reached out and touched the Deception Decoy.

QUESTION 3: WHAT IS THE PARENT PROCESS FOR THE RESPONSIBLE COMMUNICATION?

As we saw in Question 1, the Parent Process ID of the process that generated the alert is 2340. I can pivot in my logs, searching for the Process Creation event for this Process ID, to find out which process is most likely injected with malicious code. Bear in mind that this process could have been created when the user session originally started; Active Lists in ArcSight and additional correlation techniques could help locate it faster, but are a topic for another day.

This pivot shows me that Explorer.exe is the parent process responsible for creating the process that reached out and touched my Deception Decoy.

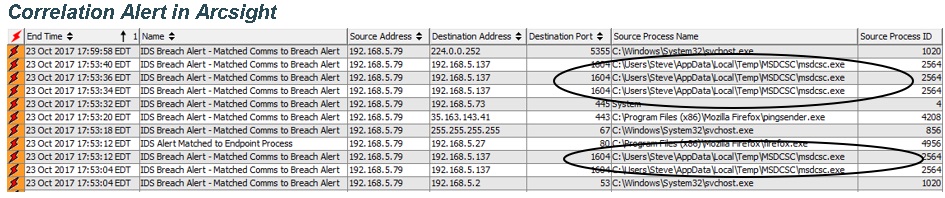

Correlation Alert in ArcSight
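One way an Active List could speed up the parent-process pivot is by remembering every running process, so a parent started at logon is found without searching back through hours of logs. The Python sketch below is a loosely analogous in-memory table, not ArcSight's implementation: it is filled from process creation events (Security Event ID 4688), pruned on process exit (Event ID 4689), and refuses a "parent" that was created after its child, a sign the PID has been reused. The class and method names are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Dict, List, Optional, Tuple


@dataclass
class ProcessRecord:
    name: str
    parent_pid: int
    created: datetime


class ProcessTable:
    """In-memory map of live processes keyed by (host, pid).

    Entries are added on process creation (4688) and removed on
    process exit (4689), loosely like an ArcSight Active List.
    """

    def __init__(self) -> None:
        self._live: Dict[Tuple[str, int], ProcessRecord] = {}

    def on_create(self, host: str, pid: int, parent_pid: int,
                  name: str, created: datetime) -> None:
        # A reused PID overwrites whatever stale entry the host had.
        self._live[(host, pid)] = ProcessRecord(name, parent_pid, created)

    def on_exit(self, host: str, pid: int) -> None:
        self._live.pop((host, pid), None)

    def lookup(self, host: str, pid: int) -> Optional[ProcessRecord]:
        return self._live.get((host, pid))

    def ancestry(self, host: str, pid: int, max_depth: int = 10) -> List[str]:
        """Names of the process and its known ancestors, child first."""
        chain: List[str] = []
        rec = self.lookup(host, pid)
        while rec is not None and len(chain) < max_depth:
            chain.append(rec.name)
            parent = self.lookup(host, rec.parent_pid)
            # A parent must predate its child; a newer entry means PID reuse.
            if parent is None or parent.created > rec.created:
                break
            rec = parent
        return chain
```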

WHAT REALLY HAPPENED

So, did the correlated events present an actual representation of how the attack really happened? Let’s find out.

To create the attack, I used the DarkComet Remote Access Trojan (RAT) to take command and control of my “victim” system. I attached the RAT in an email and sent it to my target user (Steve).

When Steve opened the attachment, the RAT detonated and established a C&C link back to the control system (msoutlookg). DarkComet planted itself in Steve’s Local/Temp directory under the name MSDCSC.EXE. Through DarkComet’s Remote Desktop capability, I launched Firefox from the user’s desktop (Explorer.exe) and reached out to an HTTP Deception Decoy, generating the high-fidelity alert.

As you can see, all these activities were correlated and captured, giving the analyst clear context about what happened. And it all started from a single high-fidelity alert.

CONCLUSION

It is not enough for organizations to keep pumping all types of security events into SIEMs and hope they get correlated and prioritized appropriately for the Level I Analyst. The triage process needs to focus on Prevention Failure Detection, using high-fidelity alerts combined with use-case-focused correlations that answer the key questions accurately and efficiently. Knowing the user session involved in the breach, the processes responsible for communications, and the other network communications involving a breached system is critical to rapidly isolating and remediating the compromise.

By combining Deception-based alerts with endpoint logs, the SIEM can deliver on its promise to correlate the alerts that matter.

The Apocalypse has been averted.


John Bradshaw, Sr. Director, Solutions Engineering at Acalvio Technologies, has more than 25 years of experience in the Cyber Security industry focusing on advanced, targeted threats. He held senior leadership roles at Mandiant, ArcSight, Internet Security Systems, Lastline, and UUNET.